Effective email marketing matters more than ever, but even the best email strategies can fall short if you don’t experiment and optimize. That’s where A/B testing comes in: a powerful tool that lets marketers refine their email marketing strategy by comparing two versions of an email to see which performs better.

In this blog, we’ll walk through a comprehensive A/B testing strategy for email marketing. You’ll learn which elements to test before hitting “send,” how to keep optimizing during a campaign, and best practices for continuous improvement. Following these guidelines can help your campaigns earn higher open rates, convert better, and ultimately drive more revenue.

What is Email Marketing A/B Testing?

A/B testing (or split testing) is a method where two versions of an email are sent to two small, randomly selected sample groups from your audience to determine which version performs better. Once the test results are in, the best-performing version is sent to the rest of your list, maximizing the impact of the campaign. The goal is to improve metrics such as:

- Open rates

- Click-through rates (CTR)

- Conversion rates

- Unsubscribe rates

A/B testing offers data-driven insights to help you tailor your content, design, and approach to resonate with your audience.
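The basic mechanics of a split can be sketched in a few lines of Python. This is a minimal illustration, not any email platform’s API: the function name `split_for_ab_test`, the 20% test fraction, and the example addresses are all hypothetical. It shuffles the list so assignment is random, gives each version an equal share of the test sample, and holds back the remainder to receive the winning version.

```python
import random

def split_for_ab_test(recipients, test_fraction=0.2, seed=42):
    """Hypothetical helper: randomly split a list into version A, version B,
    and a remainder that later receives the winning version."""
    pool = list(recipients)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    rng.shuffle(pool)          # random assignment avoids biased groups
    test_size = int(len(pool) * test_fraction)
    half = test_size // 2      # equal-sized test groups
    group_a = pool[:half]
    group_b = pool[half:2 * half]
    remainder = pool[2 * half:]
    return group_a, group_b, remainder

# Example with 10,000 made-up addresses and a 20% test sample
recipients = [f"user{i}@example.com" for i in range(10_000)]
a, b, rest = split_for_ab_test(recipients, test_fraction=0.2)
print(len(a), len(b), len(rest))  # 1000 1000 8000
```

Shuffling before slicing matters: taking the first and second thousand addresses in list order could put, say, your oldest subscribers in one group and your newest in the other.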

Why Should You A/B Test Your Email Campaigns?

Email marketing is not a one-size-fits-all approach. Each audience, industry, and demographic behaves differently. Without testing, you’re essentially guessing what will work, which is inefficient and costly. Here are the key benefits of A/B testing your email campaigns:

- Improve Engagement: Test different subject lines, content, and calls to action to see which version drives higher engagement.

- Optimize ROI: Understanding what resonates with your audience helps you allocate resources effectively.

- Reduce Unsubscribes: A/B testing can reveal which elements turn readers off, so you can drop them before they drive people to unsubscribe.

- Increase Conversion Rates: Whether you’re promoting a product or a service, A/B testing helps refine the customer journey to increase conversions.

Elements to Test Before Sending Your Email Campaign

Before launching your email campaign, several elements can (and should) be tested to give your email the best chance of success. Here’s a comprehensive list of items to A/B test in future email campaigns:

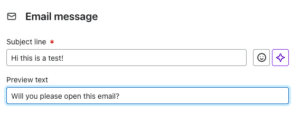

1. Subject Lines

Subject lines have a direct impact on your open rates. Some elements to test include:

- Length: Test shorter subject lines (under 50 characters) against longer ones.

- Personalization: Compare generic subject lines with those that include the recipient’s name or other personal details.

- Tone: Formal vs. informal language.

- Urgency: Adding words that create FOMO (fear of missing out), such as “limited time” or “today only.”

- Questions: Subject lines that ask a question vs. those that make a statement.

2. Preview Text

Preview text is the snippet that appears alongside or below the subject line in most email clients. Test for:

- Length: Shorter vs. longer preview texts.

- Content Alignment: Test preview text that reinforces the subject line against preview text that adds new information or value.

3. From Name

The “from” name builds trust and recognition. Elements to test:

- Company Name vs. Individual: Should it come from the brand (e.g., “Elevato”) or a person (e.g., “John at Elevato”)?

- Familiarity: A known person vs. an unfamiliar sender.

4. Send Time and Day

Different audiences may engage with your emails at different times of day or days of the week. Test:

- Morning vs. Afternoon vs. Evening

- Weekdays vs. Weekends

- Midweek (Tuesday to Thursday) vs. Start/End of Week

5. Call to Action (CTA)

Your CTA drives the desired action. Experiment with:

- CTA Text: Direct wording like “Shop Now” vs. softer wording like “Learn More.”

- Button vs. Text Link: Do people prefer clicking on buttons or text links?

- Placement: Above-the-fold CTA vs. below-the-fold.

6. Email Design

A good design can make or break engagement. Test:

- Single Column vs. Multi-Column Layout: Does a simpler or more complex design perform better?

- Visual Hierarchy: Emphasize different elements (like headlines vs. images) to see what grabs attention.

- Font Style and Size: Traditional fonts vs. modern, large text vs. small.

- Image-to-Text Ratio: Does your audience prefer more visual or text-heavy emails?

- Mobile vs. Desktop: Break results down by device to see whether a design that wins on desktop also wins on mobile.

7. Email Length

Some audiences prefer longer, detailed emails, while others might engage more with concise messaging. Test:

- Short vs. Long Emails: A brief intro with one CTA vs. a longer email that includes multiple CTAs.

8. Personalization

Does adding personal details like the recipient’s name, location, or recent purchase history improve engagement? Test:

- Personalized Greeting vs. Generic

- Personalized Recommendations vs. General Offers

9. Content Type

The type of content you include in your email matters. Test:

- Text-heavy Emails vs. Image-heavy Emails

- Educational Content vs. Promotional Offers

- Customer Stories vs. Product Spotlights

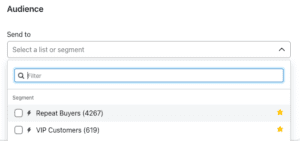

10. Segmented Audience vs. General List

Send one email to a segmented portion of your list (e.g., based on behavior, geography, or purchase history) and one to your general audience. Analyze whether segmentation impacts performance.

Items to A/B Test During the Campaign Life Cycle

Once your email campaign is live, testing doesn’t stop. Here’s what to test during the campaign’s life cycle:

1. Send Frequency

Determine if sending emails more or less frequently impacts your open rates, CTR, or unsubscribes. Test:

- Once a week vs. once a month

- Multiple emails in a short period (e.g., during a sale) vs. less frequent sends

2. Follow-up Emails

Test sending follow-up emails to those who didn’t open or click the original email. Elements to test:

- Timing: Send a follow-up within 24 hours vs. 48-72 hours after the initial email.

- Follow-up Content: Resend the same email vs. a new version with modified content or CTA.
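Setting up a follow-up timing test starts with finding the people who didn’t engage. The sketch below assumes you can export two lists from your email platform: everyone who was sent the original email, and everyone who opened or clicked it. The function name and the arm labels are hypothetical. Non-engaged recipients are shuffled and split evenly between a 24-hour and a 48-to-72-hour follow-up.

```python
import random

def plan_follow_up_test(sent, engaged, seed=7):
    """Hypothetical helper: find recipients who did not open or click the
    original email and randomly split them into two follow-up timing arms."""
    engaged_set = set(engaged)
    # Sorting first makes the shuffle reproducible for a given seed,
    # regardless of the order the export came in.
    non_engaged = sorted(r for r in sent if r not in engaged_set)
    random.Random(seed).shuffle(non_engaged)  # random assignment to each arm
    half = len(non_engaged) // 2
    return {
        "follow_up_24h": non_engaged[:half],
        "follow_up_48_72h": non_engaged[half:],
    }

# Made-up example: 10 recipients, the first four opened or clicked
sent = [f"user{i}@example.com" for i in range(10)]
engaged = sent[:4]
arms = plan_follow_up_test(sent, engaged)
print({name: len(group) for name, group in arms.items()})
```

The same selection works for the content test: swap the timing labels for “same email” and “revised email” and send both at the same delay.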

3. Dynamic Content vs. Static Content

Dynamic content adapts based on the recipient’s data, while static content remains the same for everyone. Test:

- Product Recommendations Based on Browsing History vs. Static Offers

- Dynamic Welcome Emails vs. Static Introduction

4. Email Sequence Testing

When sending a series of emails, such as a welcome series or drip campaign, test the structure and timing of the sequence and track how recipients respond:

- Short vs. Long Sequences

- Time Gaps Between Emails

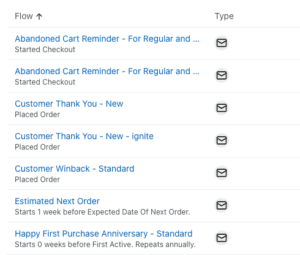

5. Cart Abandonment Email Testing

Cart abandonment emails can recover lost sales. Test:

- Number of Emails: Single email reminder vs. a sequence of 2-3 reminders.

- Incentive Testing: Offering a discount in the email vs. no discount.

General Best Practices for Email A/B Testing

A/B testing requires strategic planning and a clear understanding of what you aim to achieve. Below are general best practices to guide you:

1. Test One Variable at a Time

To isolate the cause of success or failure, change only one variable per test (e.g., the subject line or the CTA). That way, any difference in results can be attributed to that single change.

2. Select a Sizable Sample

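Make sure your sample is large enough to produce statistically significant results. Many marketers allocate 10-20% of their list to the test, but whether that is enough depends on your total list size, your baseline rates, and how small a difference you want to detect. On smaller lists, you may need to split the entire list between the two versions.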
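How large is “large enough”? A rough answer comes from the standard normal-approximation formula for comparing two proportions. The sketch below assumes a two-sided test at a 5% significance level with 80% power; the function name is hypothetical, and the 20% baseline and 22% target open rates are made-up inputs to replace with your own numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variant(p_baseline, p_target, alpha=0.05, power=0.8):
    """Hypothetical helper: approximate recipients needed in EACH group to detect
    a change from p_baseline to p_target (two-sided, normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_baseline + p_target) / 2            # average of the two rates
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_baseline * (1 - p_baseline) + p_target * (1 - p_target))
    ) ** 2
    return ceil(numerator / (p_baseline - p_target) ** 2)

# Made-up goal: detect an open-rate lift from 20% to 22%
n = sample_size_per_variant(0.20, 0.22)
print(n)  # 6510
```

With those inputs, each version needs about 6,500 recipients, or roughly 13,000 in total, so a 10-20% test sample only reaches that size on lists of about 65,000 to 130,000 contacts. Larger expected differences need much smaller samples, which is why bold changes are easier to test on small lists than subtle tweaks.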

3. Define Your Success Metric

Determine what constitutes a successful test upfront. Are you aiming to increase open rates, CTR, or conversions? Knowing your primary goal helps refine your test parameters.

4. Run Tests for a Sufficient Time

Don’t jump to conclusions too quickly. Let your test run long enough to gather meaningful data. A window of 24 to 48 hours is common, but metrics that take longer to accumulate, such as conversions, may need more time.

5. Keep Track of Historical Data

Maintain a history of your tests and their outcomes. This helps identify trends over time and enables smarter future tests.

6. Test with Control Groups

Keep one version as your control, usually your current or standard email, and send the variant to a comparable, randomly selected group. The control provides a baseline for comparison.

7. Leverage Automation Tools

Use automation tools to run tests and act on the results with less manual work. Platforms like Mailchimp, HubSpot, or Klaviyo offer built-in A/B testing features, and some can automatically send the winning version to the rest of your list once the test period ends.

8. Analyze and Iterate

After the test, analyze the results and apply the findings to your future campaigns. Consistent optimization leads to better long-term results.
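A common way to check whether a winner is real rather than random noise is a two-proportion z-test on your success metric. The sketch below is a generic statistical check, not a feature of any particular email platform; the function name is hypothetical and the open counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Hypothetical helper: two-sided z-test for the difference between two rates,
    e.g. opens / delivered or clicks / delivered. Returns (rate_a, rate_b, p_value)."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)  # rate if there is no difference
    std_err = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        # Both groups had 0% or 100% success: no evidence of a difference.
        return rate_a, rate_b, 1.0
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return rate_a, rate_b, p_value

# Invented results: 2,000 emails delivered per version, counting opens
rate_a, rate_b, p = two_proportion_z_test(400, 2000, 460, 2000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  p = {p:.3f}")
if p < 0.05:
    print("The difference between A and B is statistically significant at the 5% level.")
else:
    print("Inconclusive: keep the control or collect more data.")
```

If the p-value is above your threshold, treat the result as inconclusive rather than declaring a winner, and log it in your test history anyway; a string of inconclusive tests is itself a sign that your samples are too small for the differences you are chasing.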

The Bottom Line

A/B testing is an indispensable tool in email marketing that allows you to make data-driven decisions, maximize ROI, and continuously improve campaign performance. By thoroughly testing various elements before sending and throughout the campaign’s life cycle, you can better understand your audience and fine-tune your emails for higher engagement and conversions.

At Elevato, we recommend that you continuously experiment with different variables, leverage automation tools, and always keep your audience at the center of your strategy. Implementing a robust A/B testing plan can turn good email campaigns into great ones, providing a measurable impact on your marketing success.

Remember, email marketing isn’t static, and your testing strategy shouldn’t be either. Keep evolving with each campaign, and the results will speak for themselves. Contact us to schedule a free consultation!